So you decided to launch a new app. You secured funding, developed the product, and are ready to hit the market. At this point, you may think that you're done with the hardest part, but that can change fast if your app gets pulled from the app store.

Apple and Google have set strict guidelines around how apps must be developed, updated, and promoted. These requirements are meant to support the high-quality standards of both stores, but they can throw a few curveballs into your publishing process.

Now, let's discuss the most common reasons why your app can get booted from app stores and how you can avoid this scenario in the first place.

Key takeaways

- Google Play and the App Store can delist an app for various reasons, including malware, restricted content, copyright violations, and impersonation.

- Overall, the App Store is stricter when it comes to privacy, security, and content quality, but Google Play is catching up with its new guidelines and review policies.

- You can absolutely resubmit the app once you've fixed the reason behind its removal.

Malware slips into the app

Apple and Google are constantly perfecting their algorithms to find applications with malicious content. Even if you're not intentionally adding malicious features, your app could still be flagged.

Many developers violate policies without realizing it. This is often the case when apps implement third-party code from unreliable SDKs that inject disruptive ads or spyware that secretly collects user data. For example, in 2023, security company ESET found 17 malware-laced loan apps on popular app stores and on third-party ones.

In 2024, security experts at Zscaler discovered over 90 malicious banking apps on the Google Play store that chalked up 5.5 million installations. These apps had the Anatsa banking trojan, known for stealing people's e-banking credentials.

No wonder app stores have become more vigilant and suspicious of applications with undocumented features. In 2024, 116,105 of the 1,931,400 app submissions rejected by the Apple App Store were turned down for safety reasons. On the Google Play Store, 2.36 million policy-violating apps were removed in 2024, with 158,000 developer accounts blocked.

That's why you must vet all third-party code when developing an app and check all the boxes in terms of data privacy and security, including data minimization techniques, secure storage, and other safeguards.
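As an illustration of data minimization, here is a minimal Python sketch that strips a payload down to an allow-list of fields before it is stored or sent anywhere. The field names and the `ALLOWED_FIELDS` allow-list are hypothetical and not taken from any store guideline; the point is only that everything not explicitly needed gets dropped.

```python
# Hypothetical allow-list: only the fields the feature actually needs.
ALLOWED_FIELDS = {"user_id", "locale", "app_version"}


def minimize(payload: dict) -> dict:
    """Return a copy of the payload that contains only allow-listed fields."""
    return {key: value for key, value in payload.items() if key in ALLOWED_FIELDS}


# Example event with extra data the app has no reason to collect.
raw_event = {
    "user_id": "u-123",
    "locale": "en-US",
    "app_version": "2.4.0",
    "contacts": ["alice@example.com"],
    "precise_location": (52.52, 13.40),
}

print(minimize(raw_event))
# {'user_id': 'u-123', 'locale': 'en-US', 'app_version': '2.4.0'}
```

An allow-list is used here rather than a block-list so that any new field added later is excluded by default until someone decides it is needed.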

The app contains disruptive adware

Disruptive adware overwhelms the app, or even the device itself, with aggressive, intrusive ads. This may look like an app that blasts ads while a user tries to make a phone call or unlock their device.

In 2022, security experts caught 75 apps on Google Play and another ten iOS apps in the act of fraudulent ad activity. In 2023, 43 apps, including TV/DMB Player, Music Downloader, and others, were taken down by Google Play due to hidden ad malware (they loaded ads while a phone's screen was off).

The app is a copycat

Apps that clone existing products without adding any meaningful value aren't welcome on the App Store and Google Play.

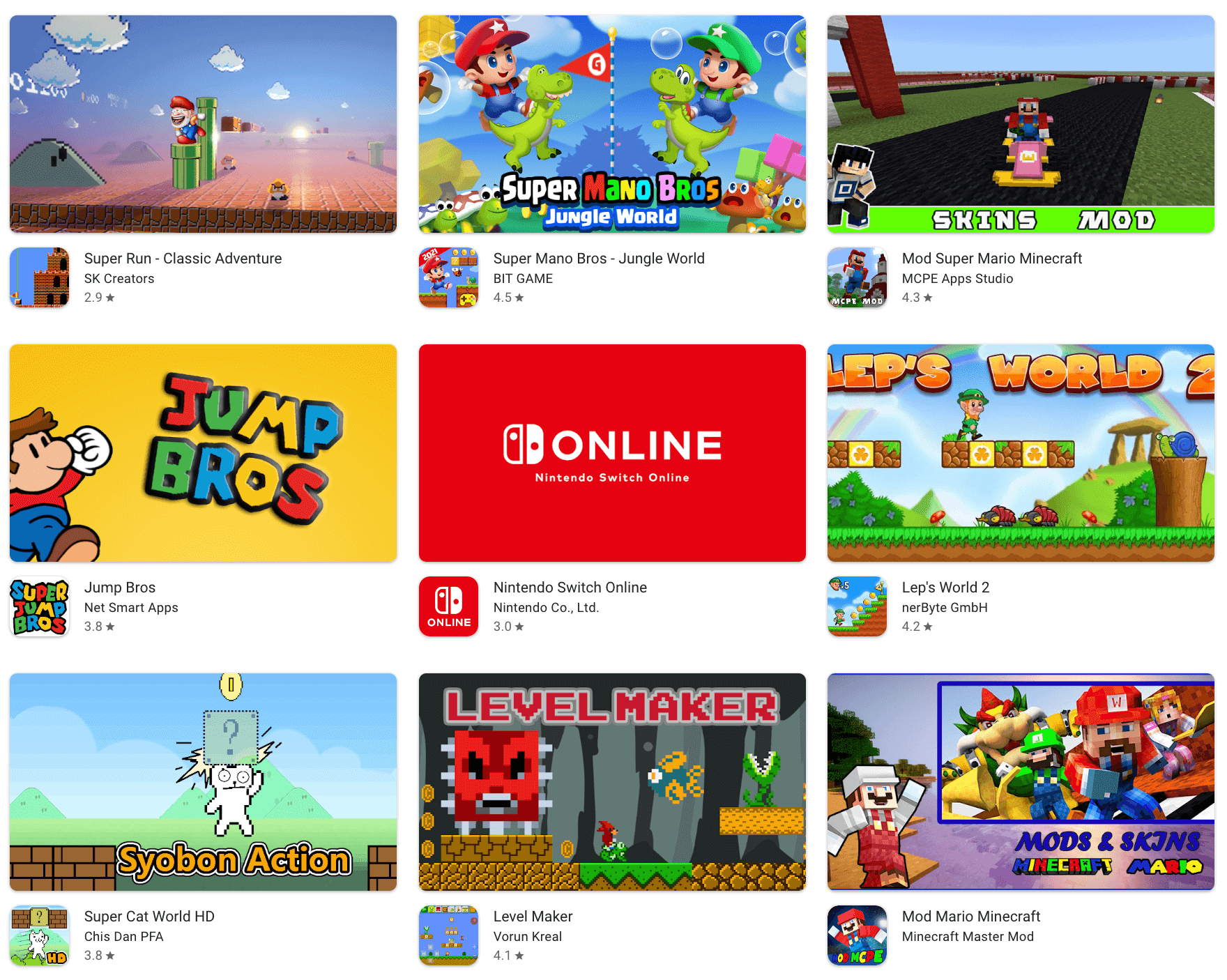

For instance, if you type in the famous "Mario" brand on Google Play, you'd be surprised at the number of off-brand Mario lookalikes. Many of these apps likely violate Nintendo's trademark, copyright, or other intellectual property rights.

ChatGPT's popularity also became the reason behind a wave of clone apps flooding both Google Play and the App Store. To battle clones, Apple tightened its rules, now classifying impersonations as a severe violation of the store's rules.

In general, such infringements can be related to images or music, gameplay mechanics, or character and brand names. Most of these apps mean no harm to the user and are just designed to capitalize on brand recognition, but this doesn't make them immune to removals.

The app infringes on user data privacy

When it comes to user data access, neither Apple nor Google grants it lightly. If your application requires it, you must clearly justify why that data is needed, explain how your app will use it, and outline the measures in place to protect it.

Since 2021, Google has been requiring developers to disclose data collection, sharing, and security practices in the Data Safety section. In 2023, Google also updated Google Play Protect, its built-in free threat detection service, with a real-time scanning tool.

On Apple's side, starting May 1, 2024, apps that don't disclose their API use and data use in a privacy manifest file are denied access to the ecosystem.
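To catch a missing declaration before submission, a team could run a small check over the privacy manifest file. The sketch below uses Python's standard `plistlib` to parse a manifest and list which of the top-level keys described in Apple's privacy manifest documentation are absent. Treating all four keys as expected is an assumption of this sketch, the sample manifest is made up, and this is not how Apple's review works.

```python
import plistlib

# Top-level keys described in Apple's privacy manifest documentation.
# Expecting all four in every manifest is an assumption of this sketch.
EXPECTED_KEYS = (
    "NSPrivacyTracking",
    "NSPrivacyTrackingDomains",
    "NSPrivacyCollectedDataTypes",
    "NSPrivacyAccessedAPITypes",
)


def missing_manifest_keys(manifest_bytes: bytes) -> list[str]:
    """Parse a property list and return the expected keys it does not contain."""
    manifest = plistlib.loads(manifest_bytes)
    return [key for key in EXPECTED_KEYS if key not in manifest]


# Made-up, incomplete manifest used only for illustration.
SAMPLE_MANIFEST = b"""<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>NSPrivacyTracking</key>
    <false/>
    <key>NSPrivacyCollectedDataTypes</key>
    <array/>
</dict>
</plist>
"""

print(missing_manifest_keys(SAMPLE_MANIFEST))
# ['NSPrivacyTrackingDomains', 'NSPrivacyAccessedAPITypes']
```

In a real project, the bytes would come from reading the app's `PrivacyInfo.xcprivacy` file, and a non-empty result could fail the build before the app ever reaches review.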

⚠️ For healthcare apps, compliance is even more complex on both platforms. On the App Store, any app that uses HealthKit data, personal health information, or other sensitive user data must have a privacy policy in place and obtain explicit user consent. In the same vein, Android apps must have their data collection, sharing, and security practices detailed in the Data Safety section and the Health apps declaration form.

The app provides little value

If an app fails to deliver value or provide users with necessary functionality, it's likely to be flagged for removal. That includes spammy apps, low-quality clones, or those built just to generate ad revenue. Misleading apps are also lumped into this category.

An unresponsive button, mismatched app icons, or app descriptions that overpromise are common triggers for rejections on both platforms. For example, after the start of the COVID-19 pandemic, both Apple and Google cracked down on all the coronavirus-related applications that were not published by health organizations or governments, to prevent the spread of misinformation.

The app is abandoned

Both platforms host a significant number of abandoned apps, meaning apps that have not been updated in two or three years. The Play Store has around 1.3 million neglected apps, while the App Store has around 496 thousand. Both stores tend to either hide or purge such apps because they don't take advantage of the latest versions of Android and iOS, new APIs, or new development methods.

For Apple apps, the bar to get in and stay is even higher: if the app has been downloaded only a few times or not at all during a rolling 12-month period, it may be removed from the App Store.

The app features restricted content

Both Apple and Google impose strict content restrictions on applications to make sure the app creates a safe space for all users. To keep your apps in stores, make sure you avoid content that includes:

- sexually explicit or pornographic content;

- defamatory, discriminatory, or mean-spirited content;

- things that potentially endanger children;

- graphic depictions or descriptions of violence or violent threats to any person or animal;

- bullying and harassment;

- realistic portrayals of people or animals being killed, maimed, tortured, or abused;

- instructions on how to engage in violent activities like bomb or weapon-making, or facilitate the purchase of firearms or ammunition;

- self-harm, suicide, eating disorders, choking games, or other acts that may result in injury or death;

- hate speech;

- reference to sensitive events like a disaster, atrocity, conflict, or death;

- illegal activities;

- inflammatory religious commentary or inaccurate or misleading quotations of religious texts;

- inappropriate user-generated content.

While most of this content can be easily flagged and removed by app owners before the app release, user-generated content is harder to control. We recommend using strong moderation tools, reporting mechanisms, and filters to keep harmful content at bay.
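The combination of a filter and a reporting mechanism can be sketched in a few lines of Python. Everything here is hypothetical: the `BLOCKED_TERMS` placeholders, the `REPORT_THRESHOLD` value, and the `Post` type are illustrative assumptions, and a production app would rely on a maintained moderation service and human review rather than a bare word list.

```python
import re
from dataclasses import dataclass, field

# Placeholder terms and threshold, chosen only for illustration.
BLOCKED_TERMS = {"badword", "slur"}
REPORT_THRESHOLD = 3


@dataclass
class Post:
    text: str
    # IDs of users who reported the post; a set ignores repeat reports.
    reports: set[str] = field(default_factory=set)


def contains_blocked_term(text: str) -> bool:
    """Check whether any whole word in the text is on the block-list."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKED_TERMS for word in words)


def report(post: Post, reporter_id: str) -> None:
    """Record a user report against the post."""
    post.reports.add(reporter_id)


def needs_review(post: Post) -> bool:
    """Flag a post if the filter matches or enough distinct users reported it."""
    return contains_blocked_term(post.text) or len(post.reports) >= REPORT_THRESHOLD


post = Post("Totally harmless message")
for user in ("u1", "u2", "u2", "u3"):
    report(post, user)

print(needs_review(post))                     # True: three distinct reporters
print(needs_review(Post("this is a badword")))  # True: filter match
```

Flagged posts would then go to a moderation queue instead of being deleted automatically, since simple word matching produces both false positives and misses.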

Orangesoft’s case

Our team partnered with a dating app startup to develop an application for Android and iOS. Given the nature of the application and the strict guidelines of Apple and Google, we dedicated significant effort to studying the Developer Program policies and drilling down into explicit content restrictions.

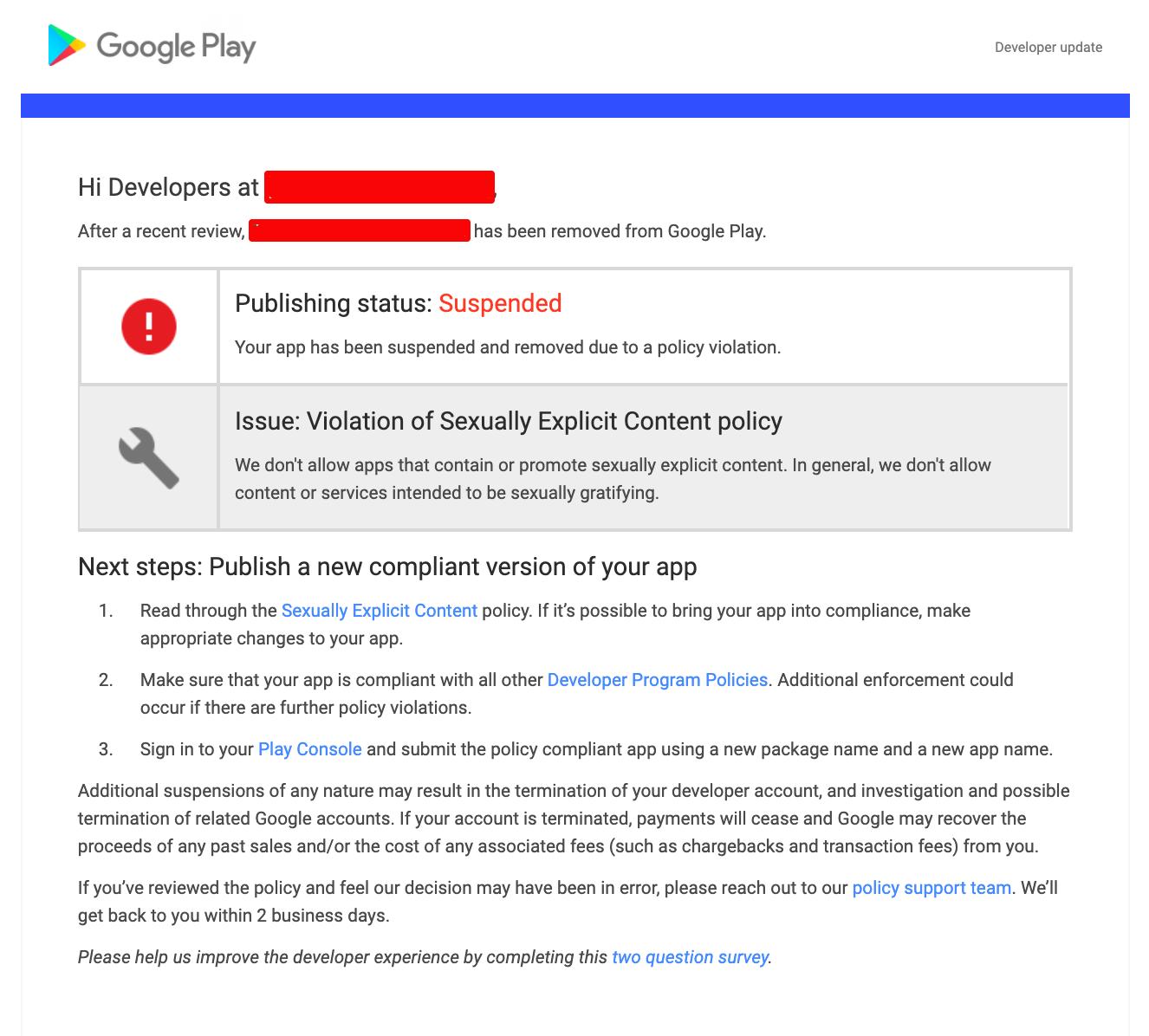

The app was approved by Google just a few days after submission and was pending publication. We planned to submit an iOS version the week after and then make them both public at the same time. However, a week after the review, we received an email stating that the app had been suspended and removed from the Google Play Store.

Usually, Google sends a warning email, giving developers a chance to fix the issues and resubmit the app. But that wasn't the case with the dating app. A few hours later, we found out that our client's developer account was terminated without any warning.

We thought that the reviewer got the wrong idea about the app because of its provocative chat scenario titles, such as “sex” and “sex and friendship.” We revisited Google's statement about inappropriate content and didn't find any specific prohibition against sexually suggestive terms in the guidelines. What's more, our app wasn't the first to use such terms; some other applications on Google Play contained terms like "swingers," "threesome," and "BDSM."

☝️ On July 8, 2020, Google announced an update to the Developer Program policies. Among other changes, it added one more paragraph to the Sexual Content and Profanity section:

"Content that is lewd or profane - including but not limited to content which may contain profanity, slurs, explicit text, adult/sexual keywords in the store listing or in-app."

Now that explains why the app could have been deleted from the Play Store.

Within the following week, we sent a string of emails and appeals, but all we got back were automated, unhelpful answers from Google.

The main issue in such situations is that all emails and appeals are received and answered by Google bots. So, in this case, you have only two options:

- Write articles and posts on social media and platforms like Reddit or Medium, hoping to catch the attention of Google's review team.

- Try reaching out directly to Google's Business Development or Business Relations teams. You can look for contacts on LinkedIn, on Facebook, or through your personal network.

We chose the second option, and soon our client, the owner of the dating app, managed to make a detailed appeal to the right person. A few days later, the client's developer account was unblocked.

We also removed the term “sex” and changed the chat scenarios' titles. After that, our developers sent a new APK file for review. The submission process went without a hitch, and the app was published on both the App Store and the Google Play Store.

The app fails to comply with local laws

If your application caters to a foreign audience, make sure to understand local laws and the broader social, cultural, and political context of your target market. Even if your app complies with all platform policies, it can still be ousted from the app store at the request of local authorities. For example, in 2024, Apple received 1,730 government requests to take down apps from its app store.

| Country | Apps removed |
|---|---|
| 🇨🇳 China mainland | 1,307 |
| 🇷🇺 Russia | 171 |
| 🇰🇷 South Korea | 79 |
| 🇺🇦 Ukraine | 55 |
| 🇯🇴 Jordan | 50 |
| 🇮🇳 India | 34 |
| 🇪🇬 Egypt | 9 |
| 🇮🇩 Indonesia | 9 |
| 🇹🇷 Turkey | 8 |
| 🇨🇭 Switzerland | 3 |
| 🇵🇰 Pakistan | 2 |
| 🇦🇷 Argentina | 1 |
| 🇦🇿 Azerbaijan | 1 |
| 🇲🇾 Malaysia | 1 |

This is often the case with healthcare apps. Telemedicine, prescription, mental health applications, and other healthcare apps must align with national regulations that usually require such applications to have proper licensing and certifications from the health authorities.

For example, in the EU, health apps may fall under the Medical Device Regulation (MDR), which makes them subject to CE marking. In the US, even some wellness apps must follow HIPAA requirements if they handle protected health information. In such cases, developers must submit a link to regulatory clearance documentation with the app.

Pre-publish checklist

Our developers, who have a track record of publishing over 200 apps on app stores, have prepared a checklist of things to be done before submitting an app to the app store. If you're not feeling confident in your app's readiness or just want to double-check, this checklist will guide you through the basics.

Conclusion

The process of app release can be challenging, especially if you're flying blind. However, as long as you follow the guidelines of the target platform and comply with applicable regulations, your application is set up for a long and happy life on the app store. Once it's in, don't forget to keep the application up to date, ensuring it has the latest security patches and performance updates.

![Why Google and Apple may remove your app and how to deal with that? [+free pre-publish checklist]](/_next/image?url=https%3A%2F%2Fimg.orangesoft.co%2Fmedia%2Fwhy-was-my-app-deleted-from-the-app-store-2.jpg&w=3840&q=75)