It takes a sizable amount of effort to develop high-quality IoT mobile applications, and testing them manually takes even more. We at Orangesoft know firsthand how challenging it can be to ensure that mobile applications reach the level of quality needed to interact seamlessly with third-party devices.

In this blog post, we’ll share our battle-tested experience, unusual cases, QA bottlenecks, and risks inherent in IoT mobile projects.

Keep in mind that all examples in this article are based on the following two types of devices we have worked with:

- Optics with built-in video recorders, laser rangefinders, and Wi-Fi or Bluetooth Low Energy (BLE) modules to broadcast the video signal to a mobile app

- Lights equipped with high-frequency (HF) motion detectors, daylight sensors, and BLE modules for communication with the app

⚠️ The Internet of Things (IoT) is a blanket term for a myriad of solutions, products, and technologies that can mean different things to different people. In this blog post, we are talking specifically about sensor-enabled devices complemented with interfaces that can also network and communicate with each other and with the environment.

The double challenge of testing IoT mobile applications

Mobile application testing is challenging as is. Device-related differences such as OS types and screen sizes, a variety of development tools for different platforms, network types and providers, different application distribution channels, and diverse user groups are just a few of the testing challenges that should be accounted for.

Moreover, app or domain specifics always entail additional project and product risks that must be considered in quality assurance. Typical risks in mobile app development include:

- Many combinations for testing

- The need for a wide range of test devices

- Evolving guidelines of major app platforms, such as iOS and Android

- Underskilled testing specialists who lack experience and knowledge of the mobile application ecosystem and the process of mobile app development

All of the hurdles mentioned above can increase the time and cost of testing. But things get even trickier when the Internet of Things is involved. Not only do IoT solutions inherit the same testing challenges as mobile applications, but they also bring unique challenges to the table.

Here are some distinctive features of IoT solutions that impact their development and testing:

- Diverse smart devices, bundles, types of power and data transfer standards between devices, and types and versions of IoT device software

- A large range of output data generated by sensors and the requirements for sensor data collecting, processing, and storing

- The variety of product goals and user groups that, in turn, determine the simultaneous interaction with one or many devices, permissible distance for connection and data transfer, connection retention mode, and the need for specific network architecture

- Requirements for low power consumption and autonomous operation

- The need for periodic updates of smart device software

- Security and reliability requirements

Input and output data, input fields and values

This point might seem basic, but that doesn’t make it any less important. Several of our projects used custom presets to configure device operation. In such cases, the user needs to set values for environmental parameters, which are physical quantities that can be expressed in different units. Sometimes, the user might also need to define time ranges for different operating modes.

In other words, we are talking about a spectrum of test cases related to unit conversion, rounding and displaying integers, displaying floating-point numbers with different numbers of decimal places, and entering negative values. Sending incorrect values to the device because of missing validation can lead to defects in the device’s operation.
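Here is a minimal Python sketch of these checks. It assumes a hypothetical distance field that accepts meters or feet, is stored with one decimal place, and must fall between 0.5 and 15 m on the device. The `to_device_value` name, the limits, and the rounding rule are illustrative assumptions; the real ones belong in the field specification. `Decimal` avoids binary floating-point surprises. Rounding happens before the range check, so validation applies to the exact value that will be sent.

```python
from decimal import Decimal, InvalidOperation, ROUND_HALF_UP

# Hypothetical field spec: distance stored on the device in meters, one decimal place.
MIN_METERS = Decimal("0.5")
MAX_METERS = Decimal("15.0")
STEP = Decimal("0.1")
FACTORS = {"m": Decimal("1"), "ft": Decimal("0.3048")}  # 1 ft = 0.3048 m exactly


def to_device_value(raw: str, unit: str) -> Decimal:
    """Parse user input, convert it to meters, round it, and validate the range.

    Raises ValueError for anything that must not be sent to the device.
    """
    if unit not in FACTORS:
        raise ValueError(f"unsupported unit: {unit!r}")
    try:
        value = Decimal(raw.strip())
    except InvalidOperation:
        raise ValueError(f"not a number: {raw!r}") from None
    if not value.is_finite():  # rejects "NaN" and "Infinity"
        raise ValueError(f"not a finite number: {raw!r}")
    try:
        # Round first, then validate, so the check applies to the value actually sent
        value = (value * FACTORS[unit]).quantize(STEP, rounding=ROUND_HALF_UP)
    except InvalidOperation:
        raise ValueError(f"value too large: {raw!r}") from None
    if not MIN_METERS <= value <= MAX_METERS:
        raise ValueError(f"{value} m is outside [{MIN_METERS}, {MAX_METERS}] m")
    return value


print(to_device_value("10", "ft"))  # prints 3.0 (10 ft = 3.048 m, rounded)
```

Note that an input of "0.45" m passes because it rounds up to 0.5. A test case should pin down exactly this kind of boundary.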

To avoid mistakes in testing, you should have a detailed description of all restrictions for input fields, so they can be checked with static testing before the functionality is developed. Regression testing of screens with so many boundary values is a great candidate for test automation. This is especially true when creating a set of custom presets is a prerequisite for testing the related functionality.
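For automated regression, you can generate boundary values from the field restrictions instead of typing them in by hand. The sketch below applies classic boundary value analysis to two hypothetical fields, taking values just outside, at, and just inside each limit. The field names, limits, and steps are assumptions for illustration.

```python
from decimal import Decimal

# Hypothetical input fields: name -> (minimum, maximum, step)
FIELDS = {
    "delay_s": (Decimal("0"), Decimal("3600"), Decimal("1")),
    "sensitivity_pct": (Decimal("1"), Decimal("100"), Decimal("1")),
}


def boundary_values(minimum, maximum, step):
    """Values just outside, at, and just inside each limit."""
    return [minimum - step, minimum, minimum + step,
            maximum - step, maximum, maximum + step]


def generate_cases(fields):
    """Yield (field, value, should_be_accepted) tuples for parametrized tests."""
    for name, (minimum, maximum, step) in fields.items():
        for value in boundary_values(minimum, maximum, step):
            yield name, value, minimum <= value <= maximum


for case in generate_cases(FIELDS):
    print(case)
```

Each tuple can feed a parametrized UI or API test. The test enters the value and asserts that the app accepts or rejects it.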

Sensory evaluation of test results

A smart lighting system allows you to set a changing light level (e.g., fading and flickering) and to vary light levels based on motion sensors. In this case, the lighting itself can often be an indicator of a successful test, the result of which we can perceive with our eyes. However, this measurement method is prone to observational error.

Perceived brightness also changes over the course of the day, which may affect test results. For example, LED lamps at 50% brightness do not look the same at night as during the day.

It’s also crucial to select test data that makes auditory and visual test results easy to evaluate. For example, a light sequence should switch between 100% and 0% intensity. Such results are easy to perceive, unlike a combination of 100% and 60% intensity: in the morning, 100% and 60% may look like one continuous phase.

And what if the motion sensor’s sensitivity needs to be analyzed, too? This parameter can’t be evaluated by the senses alone, yet we still test it with the black-box method. To derive test data, you need to study the sensor specifications and its operating principle. If you still cannot determine the optimal test distance and motion sensitivity, you can compile a small table of empirical data.

On one of our projects, we added a motion sensitivity value to the lighting settings. The first tests were discouraging: the light went crazy. It soon became clear that the default 50% sensitivity produced a lot of false positives. The motion sensors were triggered by someone blinking somewhere in the background or by a breeze outside the window. Later, the results of this experiment laid the foundation for the test design.
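The empirical table mentioned above can be as simple as a list of manual trials aggregated per sensitivity and distance. The Python sketch below uses hypothetical field names and computes two rates. The detection rate counts trials with motion that switched the light on. The false positive rate counts idle trials that switched it on anyway. Any trial values you feed it come from your own measurements; none are implied here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    sensitivity_pct: int
    distance_m: float
    motion_present: bool  # did the tester actually move in the detection zone?
    triggered: bool       # did the light switch on?


def summarize(trials):
    """Build {(sensitivity, distance): (detection_rate, false_positive_rate)}.
    A rate is None when there were no trials of that kind."""
    counts = defaultdict(lambda: [0, 0, 0, 0])  # hits, motion trials, false alarms, idle trials
    for t in trials:
        c = counts[(t.sensitivity_pct, t.distance_m)]
        if t.motion_present:
            c[0] += t.triggered
            c[1] += 1
        else:
            c[2] += t.triggered
            c[3] += 1
    return {
        key: (hits / moves if moves else None, alarms / idle if idle else None)
        for key, (hits, moves, alarms, idle) in sorted(counts.items())
    }
```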

System permissions

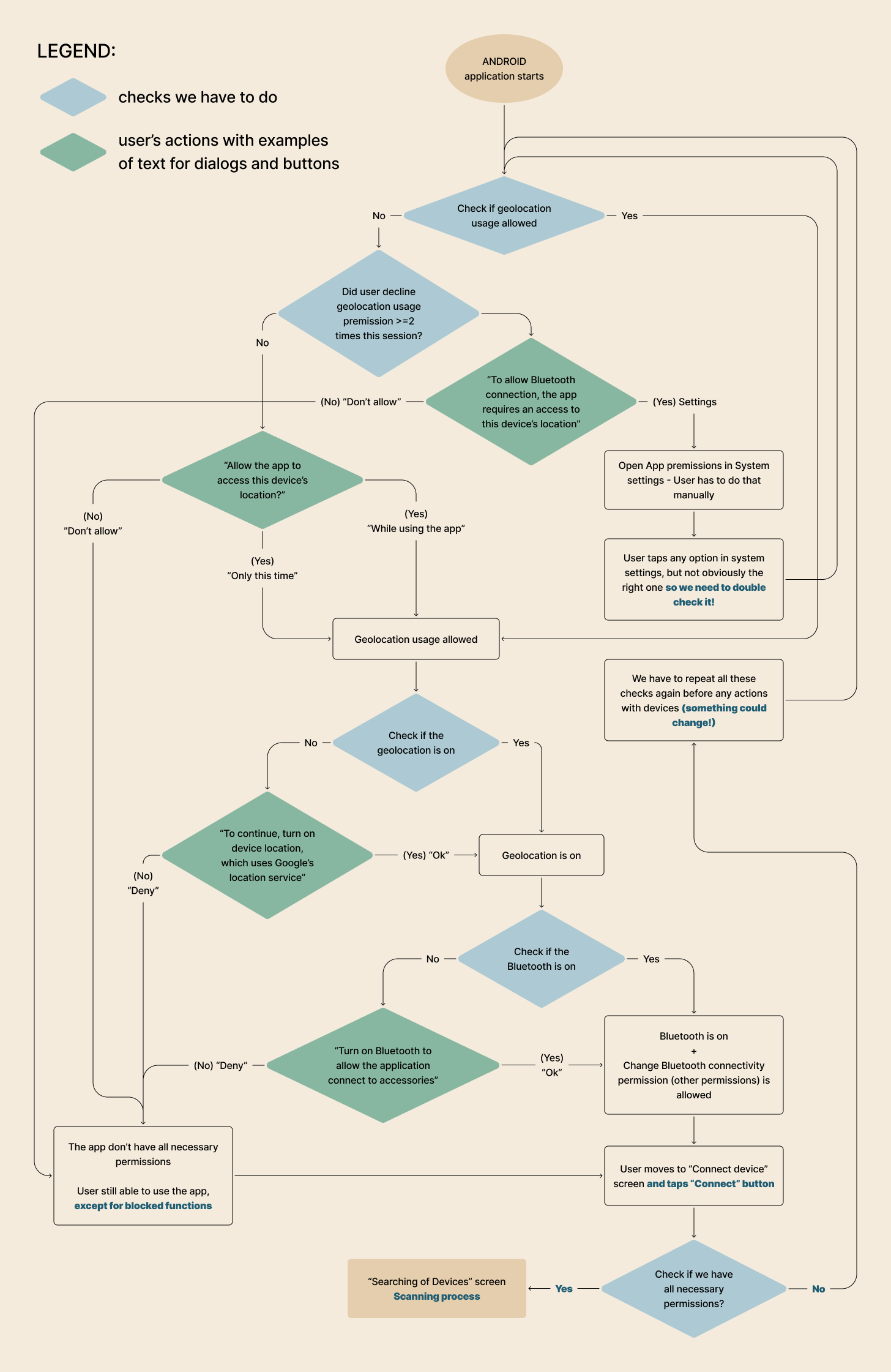

Mobile operating systems grant permissions to mobile apps at a fine-grained level. IoT-based mobile applications usually include a wide array of permission-based functionalities. This is especially true for Android mobile applications that use Bluetooth: the app must get permission to turn on and use Bluetooth and geolocation.

What does geodata have to do with it? In some cases, you may need it for more precise communication or consistency with security requirements. The most important thing here is that the user always has the choice and the ability to block any of these requests, which, in turn, may block access to the app’s functionality.

To avoid this, you have to carefully consider every scenario that leads to blocking, including permission revocation from the system settings. Core functions such as smart device connection and management may be unavailable without the necessary permissions, so in each case the app needs to display the system dialogs, alerts, and other graphical elements again. To improve user experience, the app can request each permission right before the user needs the related functionality instead of bombarding the user with a cascade of dialogs at startup.

To support such tasks, QA engineers use combinatorial test design techniques, such as decision table testing, that reflect system behavior under different combinations of user actions. Visualization tools such as diagrams and flowcharts also help a lot here: they make these cases easier to understand and easier for the team to cover.
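When the conditions are binary, a decision table can be generated rather than drawn by hand. In this sketch, three things are hypothetical: the conditions, the screen names, and the rule that permissions are checked before the Bluetooth state. The point is that every combination gets exactly one expected outcome.

```python
from itertools import product

# Hypothetical binary conditions for a BLE app.
CONDITIONS = ("bluetooth_on", "bluetooth_permission", "location_permission")


def expected_screen(bluetooth_on, bluetooth_permission, location_permission):
    """Hypothetical rules: what the app should show for one combination."""
    if not bluetooth_permission:
        return "explain_bluetooth_permission"
    if not location_permission:
        return "explain_location_permission"
    if not bluetooth_on:
        return "ask_to_enable_bluetooth"
    return "device_list"


def decision_table():
    """One row per combination of conditions, each with its expected outcome."""
    return [
        (dict(zip(CONDITIONS, combo)), expected_screen(*combo))
        for combo in product((True, False), repeat=len(CONDITIONS))
    ]


for conditions, screen in decision_table():
    print(conditions, "->", screen)
```

With n binary conditions, the table has 2^n rows. That stays manageable for a handful of permissions.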

Device file transfer settings

In some cases, the user may be able to save or send files like photos and videos from one device to another. In testing this case, you might want to pay extra attention to file naming by checking allowable characters and string length limits.

The most common operating systems have specific file naming rules. For example, Unix-like systems don’t allow the / character (or the null character) in file names, and Windows additionally forbids characters such as * and ?. In iOS and macOS, you can’t create an empty name or put spaces at the beginning of a string.

To prevent defects, define file name limitations up front and use them as a development requirement. It’s better to apply the same name length limit on all devices and a single set of supported characters that is valid on every OS involved. You should also try saving a file under a name that already exists in the system. This type of testing falls within the realm of compatibility testing.
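One way to turn these limitations into a shared requirement is a single validation function used by both the app and the tests. The sketch assumes a hypothetical 100-character limit. It forbids control characters and the characters Windows reserves, a set that includes `/`, the only printable character Unix forbids. Adjust both rules to the platforms you actually target. The collision helper shows one possible policy for duplicate names: appending a counter before the extension.

```python
import re

# Hypothetical shared rules: a common denominator across the target platforms.
MAX_NAME_LENGTH = 100
FORBIDDEN = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def validate_name(name):
    """Return a list of rule violations; an empty list means the name is valid."""
    errors = []
    if not name:
        errors.append("empty")
    if len(name) > MAX_NAME_LENGTH:
        errors.append("too long")
    if name != name.strip():
        errors.append("leading or trailing whitespace")
    if FORBIDDEN.search(name):
        errors.append("forbidden character")
    return errors


def unique_name(name, existing):
    """Resolve a collision by appending ' (n)' before the extension."""
    if name not in existing:
        return name
    stem, dot, ext = name.rpartition(".")
    if not dot:  # no extension at all
        stem, ext = name, ""
    n = 1
    while f"{stem} ({n}){dot}{ext}" in existing:
        n += 1
    return f"{stem} ({n}){dot}{ext}"


print(validate_name("clip*1.mp4"), unique_name("clip.mp4", {"clip.mp4"}))
```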

Testing the connection

Device connectivity lies at the core of IoT products that use short-range communication. Let’s zoom in on the key steps of this process, using Bluetooth Low Energy connectivity as an example. Keep in mind that the steps may differ for other communication technologies.

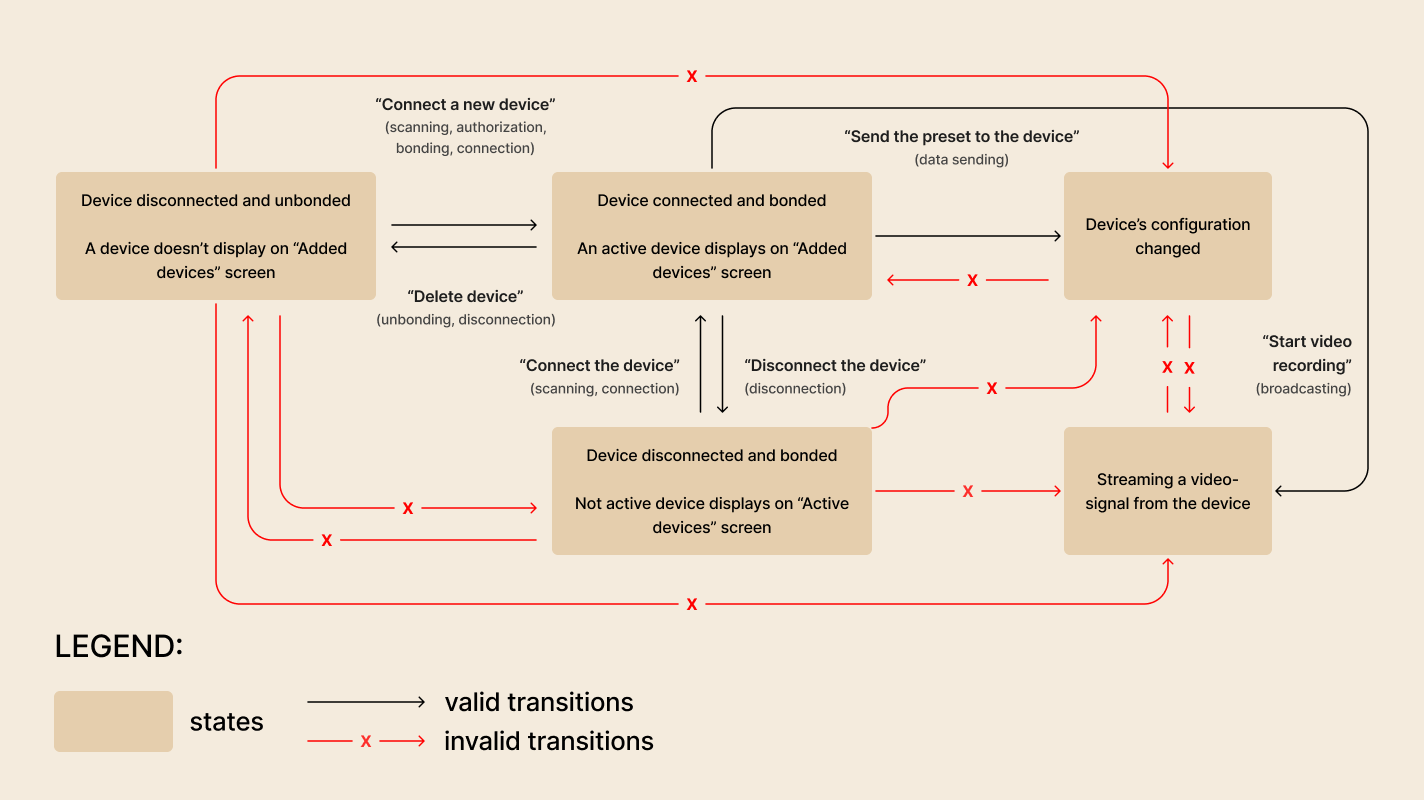

- The process of device connectivity starts with scanning, when the device listens for other devices advertising within its radio range.

- Scanning is often accompanied by filtering to hide unwanted devices from the scan results.

- After that, pairing creates a communication channel between two devices, using one or more shared secret keys for further encryption of traffic.

- The user then manually enters a code to authorize a device.

- If authentication is successful, the process continues with bonding. Here, a system stores the keys created during pairing to form a trusted device pair for use in subsequent connections. Bonded devices can avoid re-authorization and connect automatically.

- Disconnection and unbonding are the final steps of the process. Disconnection can occur automatically (for example, if the user doesn’t need to keep the connection open for a long time), or the user can close the connection manually. Unbonding removes the existing device pair, so the next time you want to bond the devices, you’ll have to authorize the connection and create a new pair.

To ensure connection quality, you need to run a number of tests. They should check the scan results, which should exclude already connected devices. They should also cover the authentication process: code generation, code entry, and the user interface, if a user is involved.
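Scan result checks are easier to reason about when the filtering rules are written down explicitly. The Python sketch below models a hypothetical advertisement record. It keeps only devices that pass three filters:

- they advertise the product’s service UUID (a made-up value here);
- their signal is above an assumed RSSI floor of -90 dBm;
- they are not already connected.

Repeated advertisements from the same address are collapsed into one entry, since a device usually advertises many times during a single scan.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical 128-bit service UUID advertised by the product's devices.
PRODUCT_SERVICE_UUID = "0000abcd-0000-1000-8000-00805f9b34fb"


@dataclass(frozen=True)
class Advertisement:
    address: str
    name: Optional[str]
    service_uuids: tuple
    rssi: int  # dBm; values closer to 0 mean a stronger signal


def filter_scan_results(ads, connected, min_rssi=-90):
    """Keep product devices above the RSSI floor that are not connected yet,
    collapse repeated advertisements per address, and sort by signal strength."""
    best = {}
    for ad in ads:
        if PRODUCT_SERVICE_UUID not in ad.service_uuids:
            continue  # not our device
        if ad.address in connected or ad.rssi < min_rssi:
            continue  # already connected or too weak to connect reliably
        if ad.address not in best or ad.rssi > best[ad.address].rssi:
            best[ad.address] = ad  # keep the strongest reading per device
    return sorted(best.values(), key=lambda ad: ad.rssi, reverse=True)
```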

When testing the connection, you also need to check how errors and state changes (“Added devices,” “Connected,” “Disconnected,” “Removed from My devices”) are displayed and whether they update in real time. State transition tables and diagrams, as well as use cases (figure 2), are the most convenient way to ensure thorough test coverage for this functionality.
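A state transition table also yields test cases mechanically. Every valid (state, event) pair checks the resulting state, and every other pair checks that the event is rejected. The states below mirror the labels above. The events and the transition table itself are assumptions for illustration.

```python
from enum import Enum
from itertools import product


class State(Enum):
    DISCOVERED = "Discovered"
    CONNECTED = "Connected"
    DISCONNECTED = "Disconnected"
    REMOVED = "Removed from My devices"


class Event(Enum):
    CONNECT = "connect"
    DISCONNECT = "disconnect"
    REMOVE = "remove"


# Hypothetical transition table; any pair not listed must be rejected.
TRANSITIONS = {
    (State.DISCOVERED, Event.CONNECT): State.CONNECTED,
    (State.CONNECTED, Event.DISCONNECT): State.DISCONNECTED,
    (State.CONNECTED, Event.REMOVE): State.REMOVED,
    (State.DISCONNECTED, Event.CONNECT): State.CONNECTED,
    (State.DISCONNECTED, Event.REMOVE): State.REMOVED,
}


def derive_test_cases():
    """Return (start, event, expected_state, is_valid) for every state/event pair.
    Invalid events are expected to leave the state unchanged."""
    cases = []
    for state, event in product(State, Event):
        target = TRANSITIONS.get((state, event))
        expected = target if target is not None else state
        cases.append((state, event, expected, target is not None))
    return cases


for case in derive_test_cases():
    print(case)
```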

Besides connection flow testing, it’s also important to thoroughly check all device interactions on reconnection. Edge cases here include simultaneous connections to more than one device (up to and just beyond the set limit). Edge cases also occur when multiple clients (mobile applications) are connected to one device.
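The connection limit is a classic boundary. N simultaneous connections should succeed, N+1 should fail gracefully, and freeing a slot should make room again. The sketch uses a hypothetical app-side `ConnectionManager`. In a real product, the limit may be enforced by the OS Bluetooth stack or the device firmware, and the test would target that layer instead.

```python
class ConnectionLimitError(Exception):
    pass


class ConnectionManager:
    """Hypothetical app-side manager that caps simultaneous connections."""

    def __init__(self, max_connections):
        self.max_connections = max_connections
        self.connected = set()

    def connect(self, address):
        if address in self.connected:
            return  # reconnecting an already-connected device is a no-op
        if len(self.connected) >= self.max_connections:
            raise ConnectionLimitError(f"limit of {self.max_connections} reached")
        self.connected.add(address)

    def disconnect(self, address):
        self.connected.discard(address)


def check_limit_boundary(max_connections):
    """Boundary test: N connections succeed, N+1 fails, freeing a slot works."""
    manager = ConnectionManager(max_connections)
    for i in range(max_connections):
        manager.connect(f"device-{i}")
    try:
        manager.connect("one-too-many")
        return False  # the limit was not enforced
    except ConnectionLimitError:
        pass
    manager.disconnect("device-0")
    manager.connect("one-too-many")
    return len(manager.connected) == max_connections
```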

Connection retention modes and formation of networks with an unconventional network architecture

IoT products often require a long-lasting connection to a device. For example, this is the case when an IoT solution has to connect to an optical device that transmits a video signal. However, other IoT solutions implement a short-term connection for key operations. For example, a short-term connection is used to transmit settings to lighting fixtures, which can then operate autonomously. In either case, you need to run interrupt and reconnection tests to validate the functionality.

Testing becomes more challenging when an unconventional network architecture comes into the picture. For example, on one of our projects, a full mesh network was a core technical requirement. In such a network, each participant also acts as a switch. When the network contains a large number of devices, the connection is established with the participant that has the best signal. That participant, in turn, acts as an intermediary and passes data on to other participants.

Imagine that this network is spread along a corridor longer than the Bluetooth range. This is very convenient: the user can stand at either end of the corridor and switch the light modes of all lamps, even distant ones.
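A simplified model helps plan the corridor test. It shows which lamp the phone enters the mesh through and which intermediate lamps must relay a command to reach the far end. The sketch rests on several assumptions:

- lamps are treated as points on a line;
- the radio range is fixed;
- the nearest lamp stands in for the one with the best signal;
- the shortest relay path is found with breadth-first search.

Real mesh stacks relay differently (Bluetooth Mesh, for example, uses managed flooding), so treat this only as a planning aid.

```python
from collections import deque


def neighbors(positions, radio_range):
    """Lamps along a corridor (1-D positions in meters) can talk if within range."""
    return {
        a: [b for b in positions if b != a and abs(positions[a] - positions[b]) <= radio_range]
        for a in positions
    }


def route(positions, radio_range, user_pos, target):
    """Enter the mesh through the nearest reachable lamp, then return the
    shortest relay path to `target` (breadth-first search), or None."""
    reachable = [n for n in positions if abs(positions[n] - user_pos) <= radio_range]
    if not reachable:
        return None
    entry = min(reachable, key=lambda n: abs(positions[n] - user_pos))
    graph = neighbors(positions, radio_range)
    previous = {entry: None}
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk back to the entry lamp
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


corridor = {"L1": 0, "L2": 10, "L3": 20, "L4": 30, "L5": 40}  # hypothetical layout
print(route(corridor, radio_range=15, user_pos=0, target="L5"))
```

Compare the expected path with the lamps that actually react during the test. The comparison shows whether relaying works end to end.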

To prepare a corridor test, split the prototype between two power supplies and place the lamps in different corners of the room. Then step back far enough that you can communicate only with the lamps closest to you. In this setup, you need to check whether commands reach the target device. If the connection is short-term, you also need to test whether the application and the devices can quickly reach different network participants.

When dealing with short-term connections, you might also find it useful to experiment with the connection hold time for optimization.

On one of our projects, the mobile application didn’t store device states, since the user could change them. Therefore, to display the current states or send commands, the application had to establish a point-to-point connection to the network. However, because of the full mesh network and the large number of lamps, the time needed to receive and send data increased significantly. The team gradually increased the number of connected devices and ran functional tests at each step. Based on the results, they decided to keep the connection open for a short delay instead of closing it immediately. As a result, the mobile app’s performance improved noticeably. We assumed that the user would perform several related actions in a row, and the added delay helped us reduce the number of time-consuming network connections.
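The connection hold delay can be modeled as an idle timeout. Each command extends the connection’s lifetime, and a new connection is opened only if the previous one has expired. This sketch is a hypothetical illustration with an injectable clock. That lets you test the effect of the delay on the number of connections without real hardware.

```python
import time


class HeldConnection:
    """Hypothetical model: keep the network connection open for `hold_s` seconds
    after the last command so that a burst of related commands reuses it."""

    def __init__(self, hold_s, clock=time.monotonic):
        self.hold_s = hold_s
        self.clock = clock  # injectable time source, so tests don't need to sleep
        self.connected_until = None
        self.connections_opened = 0

    def send(self, command):
        now = self.clock()
        if self.connected_until is None or now > self.connected_until:
            # Expensive step in a large mesh: establish a new connection
            self.connections_opened += 1
        # Transmitting `command` is omitted in this model
        self.connected_until = now + self.hold_s
```

Take five commands sent at 0, 1, 2, 10, and 11 seconds. A 3-second hold opens two connections for them, while a zero hold opens five.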

Interrupt testing

Interrupt testing is essential to ensuring the high quality of IoT solutions. Some operations must either be performed entirely or not at all, with a return to the initial state. These are known as atomic operations and may include creating, editing, and deleting entities. In IoT projects, the minimum set of operations that should be tested for interruptions includes:

- Connecting and disconnecting devices

- Receiving or transferring information/files between devices

- Receiving and installing new device software versions

Mobile applications themselves are subject to many potential interruptions. These include calls, messages, and various notifications, low memory, charging, and switching between applications or putting the device on standby while the application is running.

When paired with IoT devices, mobile applications face additional interruptions, such as:

- Switching between 3G/LTE and offline mode (relevant only if the carrier uses these protocols and the smartphone hands over from one cell tower to another, or if device functions require an internet connection)

- Disconnecting from the power supply, discharging the battery of the smart device, discharging or turning off the connected device (smartphone)

- Leaving a permitted area (for example, the parking area for scooters) or going beyond communication range (relevant for short distances when using Wi-Fi, Bluetooth LE, Zigbee, and other protocols)

Each device pair must respond correctly to an interruption without disrupting the service. The running mobile application, in turn, must act as a status indicator for the user. If an atomic operation is interrupted, the mobile application should display an error and prevent simultaneous actions by setting delays or blocking actions. For example, if unbonding a device is slow, you must still be able to perform a few actions on a connected device without the UI being blocked.
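The behavior described above, all-or-nothing updates plus blocking concurrent actions, can be sketched as follows. `DeviceSettings` and the `send` callback are hypothetical, and a `ConnectionError` stands in for any interruption. For brevity, only the app’s local state is rolled back. A real implementation would also need to restore or re-sync the values already sent to the device.

```python
import threading


class DeviceSettings:
    """Hypothetical settings store: updates are all-or-nothing, one at a time."""

    def __init__(self, settings):
        self.settings = dict(settings)
        self._lock = threading.Lock()

    def apply(self, changes, send):
        """Push `changes` via `send(key, value)`, which may raise ConnectionError.
        Returns False if another update is already running."""
        if not self._lock.acquire(blocking=False):
            return False  # an update is in progress: the UI should block this action
        snapshot = dict(self.settings)
        try:
            for key, value in changes.items():
                send(key, value)
                self.settings[key] = value
            return True
        except ConnectionError:
            self.settings = snapshot  # interrupted midway: return to the initial state
            raise
        finally:
            self._lock.release()
```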

Firmware distribution and installation is one of the first issues you should take into account when working with third-party devices. Implementation techniques for this functionality differ from project to project, even when the approach uses over-the-air (OTA) updates.

In any case, keep in mind that a firmware update is a vulnerable operation that all participants must protect from interruptions. If possible, it should be reversible. Ideally, you should have a stock of spare devices for testing. If your set of test devices is very limited, you should delegate update testing to the firmware development team.
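One common way to make firmware updates both interruption-safe and reversible is an A/B (dual-slot) scheme. The new image goes into the inactive slot, is verified with a checksum, and only then becomes active. Whether your device supports this depends on its firmware. The class below is a hypothetical in-memory model for reasoning about interrupt test cases, not a real OTA protocol.

```python
import hashlib


class DualSlotDevice:
    """Hypothetical device with two firmware slots (A/B). An interrupted or
    corrupted transfer never touches the slot that is currently running."""

    def __init__(self, firmware):
        self.slots = {"A": firmware, "B": b""}
        self.active = "A"

    def inactive(self):
        return "B" if self.active == "A" else "A"

    def update(self, chunks, expected_sha256):
        slot = self.inactive()
        self.slots[slot] = b""
        try:
            for chunk in chunks:
                self.slots[slot] += chunk
        except ConnectionError:
            return False  # interrupted: the active slot is untouched
        if hashlib.sha256(self.slots[slot]).hexdigest() != expected_sha256:
            return False  # incomplete or corrupted image
        self.active = slot  # the switch-over is the only step that changes behavior
        return True

    def rollback(self):
        """Reversibility: fall back to the previous image if it exists."""
        if self.slots[self.inactive()]:
            self.active = self.inactive()
```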

Interrupt test cases come in different forms and shapes due to differences in project specs, technologies, project goals, and implementation resources. Nevertheless, most interrupt test cases are mandatory. The defects they uncover can be blocking and can increase both reputational risks and the risk of device failure.

You can add product-based solutions, such as user engagement methods (notifications and SMS) and corrective actions, to reduce risks and their consequences. For example, rental scooters can implement deferred payment calculation and location tracking. They can also use forced stops and blocking to prevent complete battery discharge. However, these solutions are not fully reliable and do not eliminate risks completely.

The need for usability testing

In our practice, there was a case where the user had to manually enter a relative angle reflecting the wind direction relative to the direction of the device. To facilitate this task, our UI/UX designers developed a dedicated interface element that helped the user set the angle.
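Behind such an element there is usually a small piece of angle arithmetic. This hypothetical helper computes the wind direction relative to the device heading and normalizes it to the range [-180, 180). The sign convention, where positive means clockwise from the device’s direction, is an assumption. Wrap-around pairs such as 350° and 10° make natural test data for it.

```python
def relative_angle(wind_deg, heading_deg):
    """Wind direction relative to the device heading, normalized to [-180, 180).
    Assumed convention: positive values are clockwise from the device's direction."""
    # Python's % returns a non-negative result for a positive divisor
    return (wind_deg - heading_deg + 180) % 360 - 180


print(relative_angle(10, 350), relative_angle(350, 10))  # prints 20 -20
```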

When the user is involved in the setup process, you must make sure that the graphical elements for input and interactions are easy to use and that the purpose of all input parameters is clear to the user. To do that, you can embed hints, convenient pickers, default values, and a walk-through that takes the user through the key features.

Usability testing will help you understand user behavior, run the necessary checks, and embed corrective actions into the solution. Product research and user-assisted testing, such as beta testing and A/B testing, are also essential techniques for building user-centric products.

IoT project testability

When it comes to IoT systems, testability is the hidden ingredient of successful testing. Being a core component of the testing strategy, testability refers to how easy or challenging it is to test a system or one of its components.

Testability implies receiving quick feedback on the quality of the product and the impact of the changes made. Therefore, it makes the development process more predictable, facilitates decision-making, and mitigates project risks.

Let’s dwell on the techniques that can help you enhance IoT software testability.

Logging

Ensuring log coverage and access to log contents during testing can be very simple, as is the case with adb logs for Android applications. However, depending on the technology and platform, you may need access to the repository and special knowledge, or you may have to come up with another method of log extraction.

It may not seem like a priority task, but logging is essential for any IoT project. In testing, logs are invaluable assets that provide insights into root causes and give early warning on emerging issues.

If your log extraction mechanism isn’t fine-tuned, it may lead to duplicated work: developers will have to reproduce bugs repeatedly to obtain the information they need for debugging. Filtering logs by trackid lets you control the amount of information received during testing, for example, to see only the entries from a specific device.
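Filtering by trackid is straightforward once every log line carries it. The sketch assumes a hypothetical line format with `trackid=` and `device=` fields. The real format depends on your logging setup, and lines that don’t match are skipped.

```python
import re

# Hypothetical format: "<time> <level> trackid=<id> device=<address> <message>"
LINE = re.compile(
    r"^(?P<time>\S+) (?P<level>\w+) trackid=(?P<trackid>\S+) "
    r"device=(?P<device>\S+) (?P<message>.*)$"
)


def filter_logs(lines, trackid=None, device=None):
    """Yield parsed entries matching the given trackid and/or device."""
    for line in lines:
        match = LINE.match(line)
        if not match:
            continue  # not in the expected format
        entry = match.groupdict()
        if trackid is not None and entry["trackid"] != trackid:
            continue
        if device is not None and entry["device"] != device:
            continue
        yield entry
```

In practice, the lines could come from a saved log file read with `open(path)`, filtered down to a single test session before being attached to a bug report.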

Usage data collection

You may need to develop a specific design solution to collect, transmit, and store usage data, but it can definitely help you reduce the costs of device maintenance. For example, usage data collection can help the owners monitor the condition of rented scooters, prevent breakdowns or loss, and provide a better user experience.

Test and project documentation

You don’t always have to document a detailed set of test cases. However, you might find it helpful to create checklists and an established, up-to-date project wiki that collects specifications and information related to device performance. These artifacts will help the team track the impact of changes, make testing and project management more transparent, and facilitate onboarding.

Well-defined, documented project requirements will lay a solid foundation for testing, allowing QA specialists to uncover defects before the functionality is implemented. This, in turn, reduces the cost of development and testing.

Optimal test environment

If the IoT ecosystem has a large number of network participants, the team should have a test environment in which relevant errors can be reproduced and debugged. However, setting up a test environment that fully replicates the real one is too expensive for most projects. A test environment that meets the acceptance criteria is a good trade-off between effective and affordable testing.

In this regard, on-site testing and debugging in a test environment is a good way of getting the testing right. Emulators, device simulators, connection simulators, and other virtualization tools will also facilitate testing.

If app development and testing start before the team receives the test devices, testers can use simulation models: algorithms that send mock device data. Simulation models are equally helpful in system testing of individual services and in load testing during scale-up.
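A simulation model can be as small as a seeded generator of mock readings. This sketch emits hypothetical motion and daylight values for one lamp. The 500 lux peak, the noise level, and the motion probability are arbitrary assumptions. A fixed seed makes runs reproducible, so a failing test can be replayed exactly.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class LampReading:
    lamp_id: str
    tick: int
    motion: bool
    lux: float


def simulate_lamp(lamp_id, ticks, seed=0, motion_probability=0.1):
    """Yield mock readings for one lamp: random motion events and a crude
    daylight curve (0 at the first tick, peaking mid-run) with Gaussian noise."""
    rng = random.Random(seed)  # private generator: same seed, same sequence
    for tick in range(ticks):
        daylight = 500 * math.sin(math.pi * tick / ticks)  # hypothetical 500 lux peak
        yield LampReading(
            lamp_id=lamp_id,
            tick=tick,
            motion=rng.random() < motion_probability,
            lux=round(max(0.0, daylight + rng.gauss(0, 5)), 1),
        )
```

For load testing, you can instantiate the same generator for many lamps with one seed per lamp, for example, `[list(simulate_lamp(f"lamp-{i}", 24, seed=i)) for i in range(1000)]`.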

Independent team testing

IoT devices and services are often developed and tested by multiple teams that use different development lifecycle models for each object. Independent testing teams often work in silos with little to no cooperation with other testing teams. As a result, one testing team might lack sufficient knowledge about device performance due to the feedback and knowledge gaps.

On the brighter side, each of the testing teams has a thorough understanding of the product components they are responsible for. Also, each of the testing teams is well aware of the failures associated with a specific product component.

In this case, open discussion of tasks and issues and a joint search for use cases can be beneficial and enlightening for both the teams and the product being developed.

The bottom line

In this blog post, we’ve told you about some trends and cases in quality assurance of projects that involve mobile applications and the Internet of Things. A focus on reliability testing should be the most important aspect of your quality assurance strategy, and all cases listed here serve this purpose. Security is another crucial aspect of quality assurance. However, we’ve omitted it from this post on purpose, as security testing requires a comprehensive approach, which is hard to describe in one blog post.

No two IoT mobile projects are the same. Different mobile and connected device specs, communication standards, implementation methods, and project goals can impact the way you perform quality assurance. A testing strategy helps you gain a solid understanding of the project specifications and identify the optimal testing approach, techniques, and tools.

When building a testing strategy and planning the testing process, you should think about how the team can implement testing at early stages (with static methods) and maintain testability during the project. You should also think about how you can assess the feasibility of automation and how you can analyze project and product risks and adapt testing accordingly.

Also, discussing expectations and quality assurance goals with all the stakeholders is something that can reveal additional risks and priorities. We hope that the cases we’ve described in the article will shed light on the testing risks and issues in IoT projects.