Today, mobile applications are mainly associated with smartphones and wearables from well-known manufacturers. However, software development companies often have to deal with unusual or rare hardware. In these cases, mobile developers need thorough expertise to build a non-standard solution that still results in a stable, high-performing product.

For example, how about an Android-powered device with a round, non-touch screen that connects to supported vehicles via a USB port and serves as a dashboard on the steering wheel? At first glance, this combination may seem too complicated and far-fetched for mobile development. But on closer inspection, you’ll see that the Android framework has everything it takes to implement a solution for this type of hardware.

In this article, we'll explore the challenges of developing software for this type of device and zoom in on the solutions we applied to make it work. Whether you're a software developer or simply curious about the intricacies of mobile development, our case study offers insights for you and your team.

Main challenges

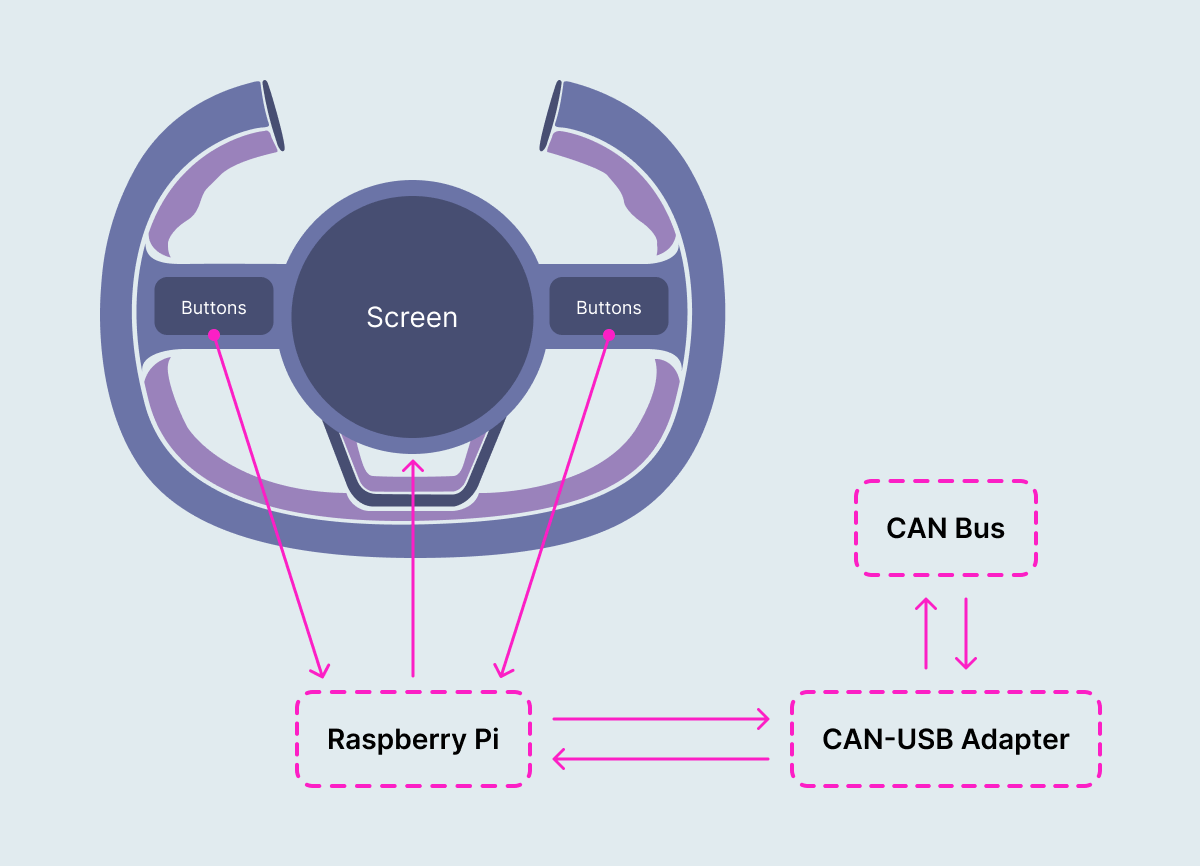

The device we were working with was based on a Raspberry Pi running Android, with a round non-touch display and hardware buttons, all assembled on a car steering wheel. The car systems were connected via the controller area network (CAN) bus, and all CAN data was transmitted to a CAN-USB adapter, which in turn was plugged into the Raspberry Pi base. At first, we considered using a Bluetooth adapter to connect the Raspberry Pi to the CAN bus, but in this particular case, we had to maintain a stable, uninterrupted connection. That’s why we chose USB over Bluetooth.

So, our goal was to create an application that runs on the round screen on the steering wheel and reads various car data (speed, torque, battery level, etc.) from the CAN bus via a USB adapter.

We began by decomposing the project into its main tasks. The most challenging ones were:

- Establishing a connection between a Raspberry Pi base and a vehicle using a USB port.

- Implementing the circular screen layout.

- Navigating inside the application using hardware buttons on a steering wheel.

- Inputting data without a keyboard.

We’ll look at each of these next.

Establishing a USB connection

There are plenty of guides and tutorials on how to set up connections between Android and USB devices. Luckily for us, the CAN-USB adapter vendor provided a convenient library for that purpose. It contained a USB driver, initialization logic, and all the interfaces required to send and receive data. In brief, to set up a USB connection, an application has to perform the following steps:

- Create an intent filter and register a broadcast receiver to detect connected devices, filtering them by vendor ID.

- Ask the user for permission to connect to the USB device if not already obtained.

- Communicate with the USB device by reading and writing data on the appropriate interface endpoints.
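The three steps above boil down to a small decision for each attached device: ignore it, ask for permission, or open it. The sketch below models that decision in plain Kotlin so it can run off-device. `AttachedUsbDevice`, `UsbStep`, `nextStep`, and the vendor ID `0x1234` are hypothetical names and values, not part of the vendor library or the Android USB API. On a real device, the data would come from `UsbManager` and `UsbDevice`.

```kotlin
// Hypothetical, Android-free model of an attached USB device.
data class AttachedUsbDevice(val vendorId: Int, val name: String)

// What the app should do next with a given device.
enum class UsbStep { IGNORE, REQUEST_PERMISSION, OPEN }

// Step 1: filter by vendor ID; step 2: ask for permission if missing;
// step 3: otherwise the device can be opened and its endpoints used.
fun nextStep(device: AttachedUsbDevice, allowedVendorIds: Set<Int>, hasPermission: Boolean): UsbStep =
    when {
        device.vendorId !in allowedVendorIds -> UsbStep.IGNORE
        !hasPermission -> UsbStep.REQUEST_PERMISSION
        else -> UsbStep.OPEN
    }
```

For example, `nextStep(AttachedUsbDevice(0x1234, "CAN adapter"), setOf(0x1234), hasPermission = false)` yields `REQUEST_PERMISSION`, while a device from any other vendor is ignored.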

We used a ready-made driver, so all initialization logic was encapsulated in the UsbDriver class:

```kotlin
class USBConnectFragment : Fragment() {

    private val connectViewModel: ConnectViewModel by viewModels()

    // ...

    lateinit var driver: UsbDriver

    // ...

    override fun onViewCreated(view: View, savedInstanceState: Bundle?) {
        super.onViewCreated(view, savedInstanceState)
        viewLifecycleOwner.lifecycleScope.launch {
            viewLifecycleOwner.repeatOnLifecycle(Lifecycle.State.CREATED) {
                launch { connectViewModel.isRealDataSourceUsed.collect(::selectDataSource) }
            }
        }
    }

    // ...

    private fun selectDataSource(isRealDataSourceUsed: Boolean) {
        if (isRealDataSourceUsed) {
            // Real CAN data: create the vendor driver; the callback fires once a device is selected
            driver = UsbDriver(requireContext()) {
                onDeviceSelected(driver)
            }
        } else {
            // No real data source: pass no USB device
            connectViewModel.onDeviceSelected(null)
        }
    }

    // ...

    private fun onDeviceSelected(usbDriver: UsbDriver) {
        connectViewModel.onDeviceSelected(USBWrapper(usbDriver))
    }
}
```

After a USB device instance is obtained and selected, the next step is initialization. This involves opening the device and setting parameters such as the baud rate (data transmission rate), configs, filters, etc. All this logic was already implemented in the vendor's library.

In our setup, the CAN bus did not simply push data to the device; there was no way to just listen for data, as with a broadcast receiver. To retrieve data from the bus, we have to periodically send properly formatted requests. We cannot disclose the exact request format due to the NDA, but in brief, a request contains the operation type (read/write), the ID of a parameter (such as speed, torque, or battery level), and an optional parameter value (for write operations).
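Since the real request format is under NDA, the following is purely illustrative. It shows one hypothetical way to pack the three pieces of information listed above into bytes: a 1-byte action, a 2-byte parameter ID, and an optional 4-byte value. `Action`, `CanRequest`, and the byte layout itself are assumptions, not the actual protocol.

```kotlin
// HYPOTHETICAL wire format, for illustration only (the real layout is under NDA):
// byte 0 = action, bytes 1-2 = parameter ID (big-endian),
// bytes 3-6 = optional 32-bit value (big-endian, write requests only).
enum class Action(val code: Byte) { READ(0x01), WRITE(0x02) }

data class CanRequest(val action: Action, val parameterId: Int, val value: Int? = null) {
    init {
        require(parameterId in 0..0xFFFF) { "parameter ID must fit in 16 bits" }
        require((action == Action.WRITE) == (value != null)) { "only write requests carry a value" }
    }

    fun encode(): ByteArray {
        val header = byteArrayOf(
            action.code,
            (parameterId ushr 8).toByte(), // high byte of the ID
            parameterId.toByte()           // low byte of the ID
        )
        val v = value ?: return header     // read requests stop here
        return header + byteArrayOf(
            (v ushr 24).toByte(), (v ushr 16).toByte(), (v ushr 8).toByte(), v.toByte()
        )
    }
}
```

A read request for a parameter with ID `0x0102` would then encode to the three bytes `01 01 02`, while a write request appends the value.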

Since we have to continuously display various vehicle parameters (for example, speed, which changes constantly), we need some kind of periodic request emitter: a class that sends requests to the CAN bus at a fixed rate:

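```kotlin
import javax.inject.Inject
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.Job
import kotlinx.coroutines.SupervisorJob
import kotlinx.coroutines.cancelAndJoin
import kotlinx.coroutines.delay
import kotlinx.coroutines.flow.MutableSharedFlow
import kotlinx.coroutines.isActive
import kotlinx.coroutines.launch
import kotlinx.coroutines.sync.Mutex
import kotlinx.coroutines.sync.withLock

class PeriodicRequestEmitter @Inject constructor() {

    @Inject
    lateinit var requestGenerator: AbstractRequestGenerator

    // Dedicated scope instead of GlobalScope; both jobs are cancelled in stopEmitRequests()
    private val scope = CoroutineScope(SupervisorJob() + Dispatchers.IO)
    private lateinit var tickJob: Job
    private lateinit var readJob: Job

    // Requests waiting to be sent; only touched while holding `mutex`
    private val pendingRequests = ArrayList<AbstractDataRequest>()

    @Volatile
    private var lastUpdateTime: Long = 0L
    private var tickCount: Long = 0L
    private val mutex = Mutex()

    companion object {
        const val SPEED_REQUEST_DELAY = 50L // ms between ticks
    }

    fun startEmitRequests(
        stream: MutableSharedFlow<AbstractDataRequest>,
        responses: MutableSharedFlow<AbstractDataResponse>
    ) {
        // Every SPEED_REQUEST_DELAY ms, queue a speed request
        tickJob = scope.launch {
            while (isActive) {
                val waitTime = SPEED_REQUEST_DELAY - (System.currentTimeMillis() - lastUpdateTime)
                if (waitTime > 0) delay(waitTime)
                tick(stream)
                lastUpdateTime = System.currentTimeMillis()
            }
        }
        // Each incoming response releases the next queued request
        readJob = scope.launch {
            responses.collect { trySendNext(stream) }
        }
    }

    private suspend fun tick(stream: MutableSharedFlow<AbstractDataRequest>) {
        val readyToStart = mutex.withLock {
            val wasEmpty = pendingRequests.isEmpty()
            addSpeedRequest()
            wasEmpty
        }
        // Send right away only if the queue was idle; otherwise a response triggers the send
        if (readyToStart) trySendNext(stream)
        tickCount++
    }

    private fun addSpeedRequest() {
        pendingRequests.add(
            requestGenerator.createRequest(CANAction.READ, CANOperation.CURRENT_SPEED)
        )
    }

    private suspend fun trySendNext(stream: MutableSharedFlow<AbstractDataRequest>) {
        // withLock releases the mutex even if emit() is cancelled or throws
        mutex.withLock {
            pendingRequests.firstOrNull()?.let { request ->
                stream.emit(request)
                pendingRequests.remove(request)
            }
        }
    }

    suspend fun stopEmitRequests() {
        tickJob.cancelAndJoin()
        readJob.cancelAndJoin()
    }
}
```

The startEmitRequests method is called from the repository. We pass in the responses MutableSharedFlow only to know when a response has arrived, so that the next request can be sent. There are two jobs here: tickJob queues a speed request every 50 ms via the tick method, and readJob collects the responses flow and, whenever a response arrives, sends the next pending request, if there is one.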
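Stripped of coroutines and dependency injection, the pacing logic of PeriodicRequestEmitter is a queue that each tick tops up and each response drains by one request. The plain-Kotlin model below is a simplified sketch, not the production class. `PacedQueue` and `waitTimeMs` are hypothetical names, the `send` callback stands in for emitting to the requests flow, and `tick` accepts several requests to illustrate what would happen if more than one parameter were polled per tick.

```kotlin
// Simplified, single-threaded model of the emitter's pacing logic.
class PacedQueue<T>(private val send: (T) -> Unit) {
    private val pending = ArrayDeque<T>()

    val size: Int get() = pending.size

    // Timer tick: enqueue, and send immediately only if the queue was idle.
    fun tick(vararg requests: T) {
        val readyToStart = pending.isEmpty()
        pending.addAll(requests)
        if (readyToStart) sendNext()
    }

    // A response arrived: forward the next queued request, if any.
    fun onResponse() = sendNext()

    private fun sendNext() {
        pending.removeFirstOrNull()?.let(send)
    }
}

// Sleep time before the next tick so that ticks stay `periodMs` apart,
// accounting for time already spent since the last tick (never negative).
fun waitTimeMs(periodMs: Long, nowMs: Long, lastTickMs: Long): Long =
    (periodMs - (nowMs - lastTickMs)).coerceAtLeast(0L)
```

With a single speed request per tick, the queue is usually empty when the timer fires, so each tick sends immediately; any extra requests wait until responses come back.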

PeriodicRequestEmitter works inside the repository class:

```kotlin
class USBCANDataRepositoryImpl @Inject constructor(
    private val requestManager: AbstractRequestManager,
    private val requestEmitter: PeriodicRequestEmitter
) : CANDataRepository() {

    lateinit var readingJob: Job
    lateinit var writingJob: Job

    val responses = MutableSharedFlow<AbstractDataResponse>()
    val requests = MutableSharedFlow<AbstractDataRequest>()

    override fun onDeviceSelected(device: DeviceWrapper?) {
        // usbDriver and connected are declared outside this excerpt
        if (device?.getDevice() != null) {
            this.usbDriver = device.getDevice() as UsbDriver
        }
        if (usbDriver != null) {
            initCANBaudRate()
            val isDeviceInitialized = tryInitDevice()
            connected.value = isDeviceInitialized
            if (isDeviceInitialized) {
                startWriting()
                requestEmitter.startEmitRequests(requests, responses)
            }
        }
    }
}
```

After the startEmitRequests method places a periodic request in the queue, the repository's writing and reading jobs (not shown here) take the request from that queue, send it over the USB connection, and fetch and parse the response. The resulting response is then emitted to the responses MutableSharedFlow, which is observed both by the emitter's readJob and by the UI, which displays periodically updated data (the car’s speed, for example).
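The request/response round trip described above can be modeled without Android or the vendor library. In this sketch, the `Transport` interface, the `ParameterValue` type, `processRequests`, and the 6-byte response layout are hypothetical stand-ins (the real format is under NDA). A fake transport makes the loop testable on a plain JVM, and the `emit` callback plays the role of the responses flow.

```kotlin
// Hypothetical abstraction over the USB connection: write a request, get the raw reply.
fun interface Transport {
    fun exchange(request: ByteArray): ByteArray
}

data class ParameterValue(val parameterId: Int, val value: Int)

// ASSUMED response layout: bytes 0-1 = parameter ID, bytes 2-5 = value, both big-endian.
fun parseResponse(bytes: ByteArray): ParameterValue? {
    if (bytes.size < 6) return null // truncated or malformed frame
    fun u(i: Int) = bytes[i].toInt() and 0xFF // read a byte as unsigned
    val id = (u(0) shl 8) or u(1)
    val value = (u(2) shl 24) or (u(3) shl 16) or (u(4) shl 8) or u(5)
    return ParameterValue(id, value)
}

// Drain the queue: send each request, parse the reply, and hand valid values to `emit`.
fun processRequests(
    requests: ArrayDeque<ByteArray>,
    transport: Transport,
    emit: (ParameterValue) -> Unit
) {
    while (true) {
        val request = requests.removeFirstOrNull() ?: break
        parseResponse(transport.exchange(request))?.let(emit)
    }
}
```

Keeping parsing in a pure function like this means malformed frames can be skipped (here, by returning null) without stopping the loop.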

Layout of the circular screen

Our next challenge was working with a circular screen. Obviously, the usual linear layouts were not a good fit, and ConstraintLayout on its own wasn’t convenient enough either, because we would have had to convert polar coordinates into linear ones. After some research, we found a very convenient component called CircularFlow. CircularFlow is a descendant of VirtualLayout. Virtual layouts in ConstraintLayout are virtual view groups that take part in constraints and layout but don’t add levels to the view hierarchy. Instead, they reference other views in the ConstraintLayout to lay them out.

CircularFlow is pretty easy to use: you specify the IDs of the N elements to be laid out circularly, then their angles and radii. In the example below, we didn’t use any linear layouts to place “artist” below “song”; everything is positioned circularly, just with different radii.

```xml
<androidx.constraintlayout.helper.widget.CircularFlow
    android:id="@+id/playerGroup"
    android:layout_width="wrap_content"
    android:layout_height="wrap_content"
    app:circularflow_angles="0, 0, 0, 130, 230, 180"
    app:circularflow_radiusInDP="108, 78, 0, 80, 80, 97"
    app:constraint_referenced_ids="songName, songBand, play, playNext, playPrev, volume"
    app:layout_constraintStart_toStartOf="parent"
    app:layout_constraintTop_toTopOf="parent" />
```

So, with the help of CircularFlow, you can easily build complex layouts with circular elements.
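To see what the angle and radius lists in the XML mean on screen, the helper below converts each (angle, radius) pair into an x/y offset from the center. It assumes ConstraintLayout's circular-positioning convention (0° points straight up and angles grow clockwise) and screen coordinates in which y grows downward. `polarToOffset` and `OffsetDp` are illustrative names, not part of ConstraintLayout.

```kotlin
import kotlin.math.PI
import kotlin.math.cos
import kotlin.math.roundToInt
import kotlin.math.sin

// Offset of a view from the center, in dp; positive y points down the screen.
data class OffsetDp(val x: Int, val y: Int)

// Assumes 0 degrees = straight up, angles increasing clockwise.
fun polarToOffset(angleDeg: Double, radiusDp: Double): OffsetDp {
    val rad = angleDeg * PI / 180.0
    return OffsetDp(
        x = (radiusDp * sin(rad)).roundToInt(),
        y = (-radiusDp * cos(rad)).roundToInt()
    )
}
```

Applied to the attributes above, songName (0°, 108dp) and songBand (0°, 78dp) sit directly above play (radius 0, the center), playNext (130°, 80dp) lands at roughly (61, 51), that is, lower right, playPrev (230°) mirrors it at lower left, and volume (180°, 97dp) sits straight below.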

Navigating the screen

The screen we were working with was a custom 5.0" round display, and at first sight, the lack of touch input seemed like a huge inconvenience. The screen was mounted in the center of the steering wheel, with two sets of hardware buttons to its left and right, so drivers could navigate the app while keeping their hands on the wheel and without much distraction from driving. That was our main safety concern, and it is one of the key requirements for automotive applications.

The easiest way to catch hardware button events was to listen for them in Activity methods such as onKeyDown and onKeyUp.

```kotlin
override fun onKeyDown(keyCode: Int, event: KeyEvent): Boolean {
    // …
}

override fun onKeyUp(keyCode: Int, event: KeyEvent): Boolean {
    // …
}
```

OK, but where do we store these events, and how do we pass them to Fragments? We created a class called KeyEventHandler.

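```kotlin
import android.view.KeyEvent

// log(), asButtonEvent(), and onPresumablyLongEvent() are project helpers not shown here
class KeyEventHandler(
    private val screenNavigatorViewModel: ScreenNavigatorViewModel
) : KeyEvent.Callback {

    override fun onKeyDown(keyCode: Int, event: KeyEvent): Boolean {
        if (keyCode == KeyEvent.KEYCODE_DPAD_LEFT) {
            // Ask the system to track this key through to its key-up (and a possible long press)
            event.startTracking()
            return true
        }
        return false
    }

    override fun onKeyUp(keyCode: Int, event: KeyEvent): Boolean {
        log(event.toString())
        if (keyCode != KeyEvent.KEYCODE_DPAD_LEFT) {
            screenNavigatorViewModel.onEvent(event.asButtonEvent())
        } else {
            // DPAD_LEFT may be part of a long press; the view model decides
            screenNavigatorViewModel.onPresumablyLongEvent(event)
        }
        return true
    }

    // KeyEvent.Callback also declares these two methods; they are unused here
    override fun onKeyLongPress(keyCode: Int, event: KeyEvent): Boolean = false

    override fun onKeyMultiple(keyCode: Int, count: Int, event: KeyEvent): Boolean = false
}
```

The main purpose of this handler is to intercept key events and pass them to ScreenNavigatorViewModel, an activity-scoped view model shared by all fragments, which holds the events in a Flow: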

```kotlin
@HiltViewModel
class ScreenNavigatorViewModel @Inject constructor() : ViewModel() {

    private val eventFlow = MutableSharedFlow<HardwareButtonEvent>()

    val events: Flow<HardwareButtonEvent> = merge(
        eventFlow
            // Project-specific extension (not shown); LONG_CLICK_BUFFER = 20 events,
            // LONG_CLICK_INTERVAL = 600 ms
            .chunked(LONG_CLICK_BUFFER, LONG_CLICK_INTERVAL)
            .mapNotNull { events ->
                val groupEvents = events.groupBy { it.keyCode }
                if (groupEvents.size > 1) {
                    // Several different buttons in one chunk: treat the latest as a normal click
                    events.last().asButtonEvent()
                } else if (events.size > 1) {
                    // The same button repeated within the interval: a long click
                    events[0].asLongButtonEvent()
                } else {
                    events[0].asButtonEvent()
                }
            }
    ).shareIn(viewModelScope, SharingStarted.WhileSubscribed())

    fun onEvent(newEvent: HardwareButtonEvent?) {
        newEvent ?: return
        viewModelScope.launch { eventFlow.emit(newEvent) }
    }

    // ... (onPresumablyLongEvent and other members not shown)
}
```

According to the project specifications, we had to differentiate between normal key events and long clicks, but it turned out that onKeyLongPress was not being triggered for some reason. Instead, when a button was pressed and held for more than a second, key events fired rapidly. To solve this, we used a chunked operator to group events: a chunk is emitted once the buffer (first argument) fills up or the interval (second argument) runs out, whichever comes first. In our case, that was 20 events and 600 ms. This way, we can tell single clicks from long clicks simply by looking at the number of events in each chunk inside mapNotNull.
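Because the chunked operator is a project-specific extension whose code isn't shown, here is a plain-Kotlin model of the idea over timestamped events. It assumes a chunk closes when it holds `bufferSize` events or when `intervalMs` has passed since its first event. `KeyPress`, `ButtonEvent`, `chunkByTimeOrSize`, and `classify` are hypothetical names; `classify` applies the same three rules as the mapNotNull block.

```kotlin
// Minimal stand-in for a key event: which button, and when.
data class KeyPress(val keyCode: Int, val timeMs: Long)

sealed interface ButtonEvent {
    data class Click(val keyCode: Int) : ButtonEvent
    data class LongClick(val keyCode: Int) : ButtonEvent
}

// Splits time-ordered events into chunks that close on size or on elapsed time.
fun chunkByTimeOrSize(events: List<KeyPress>, bufferSize: Int, intervalMs: Long): List<List<KeyPress>> {
    val chunks = mutableListOf<List<KeyPress>>()
    var current = mutableListOf<KeyPress>()
    for (event in events) {
        // Interval elapsed since the chunk's first event: close it before adding
        if (current.isNotEmpty() && event.timeMs - current.first().timeMs >= intervalMs) {
            chunks.add(current)
            current = mutableListOf()
        }
        current.add(event)
        // Buffer full: close the chunk immediately
        if (current.size == bufferSize) {
            chunks.add(current)
            current = mutableListOf()
        }
    }
    if (current.isNotEmpty()) chunks.add(current)
    return chunks
}

// Same rules as mapNotNull: mixed keys -> click on the last key,
// repeated key -> long click, single event -> click.
fun classify(chunk: List<KeyPress>): ButtonEvent = when {
    chunk.groupBy { it.keyCode }.size > 1 -> ButtonEvent.Click(chunk.last().keyCode)
    chunk.size > 1 -> ButtonEvent.LongClick(chunk.first().keyCode)
    else -> ButtonEvent.Click(chunk.first().keyCode)
}
```

A quick tap yields a one-event chunk and therefore a click, while holding a button that repeats every 30 ms fills the 20-event buffer in under 600 ms and is classified as a long click.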

That’s it. Now any fragment that needs to track key events can obtain this view model via activityViewModels() and collect its events:

```kotlin
@AndroidEntryPoint
class MediaFragment : BaseMediaFragment() {

    // …

    private val screenNavigatorViewModel: ScreenNavigatorViewModel by activityViewModels()

    override fun onViewCreated(view: View, savedInstanceState: Bundle?) {
        super.onViewCreated(view, savedInstanceState)
        // …
        viewLifecycleOwner.lifecycleScope.launch {
            // Qualified with viewLifecycleOwner so collection follows the view's lifecycle
            viewLifecycleOwner.repeatOnLifecycle(Lifecycle.State.RESUMED) {
                launch { screenNavigatorViewModel.events.collect(::onHardwareButtonEvent) }
            }
        }
    }

    private fun onHardwareButtonEvent(event: HardwareButtonEvent) {
        // event handling
    }
}
```

Inputting data

The last major challenge was data input. Our application contains a turn-by-turn navigation feature and a media player, and both features require a search option. Our main concern was driver distraction — any type of keyboard input requires concentrating on the screen, and besides that, typing by choosing keys from a small circular keyboard using buttons on the steering wheel doesn’t look like a user-friendly solution. After some discussion, we landed on voice input and speech recognition.

When it comes to integrating speech recognition into Android applications, developers have a powerful tool at their disposal: Android’s SpeechRecognizer. This class, part of the android.speech package, provides access to the device's speech recognition service and offers a simple way to recognize users' speech input.

With the SpeechRecognizer API, developers can add voice-activated features such as dictation, voice search, or command recognition. Recognition is configured through RecognizerIntent extras (for example, the language model and the language), and progress and results are reported through RecognitionListener callbacks.

First of all, this feature requires the RECORD_AUDIO permission:

```kotlin
import android.Manifest.permission.RECORD_AUDIO

private val requestPermissions =
    registerForActivityResult(ActivityResultContracts.RequestMultiplePermissions()) { permissions ->
        if (permissions.entries.isNotEmpty()) {
            if (permissions[RECORD_AUDIO] == true) {
                initRecognizer()
            } else {
                binding.searchStatus.text =
                    resources.getText(R.string.search_no_voice_permission)
            }
        }
    }

// …

override fun onViewCreated(view: View, savedInstanceState: Bundle?) {
    super.onViewCreated(view, savedInstanceState)
    if (ContextCompat.checkSelfPermission(requireContext(), RECORD_AUDIO)
        != PackageManager.PERMISSION_GRANTED
    ) {
        requestPermissions.launch(arrayOf(RECORD_AUDIO))
    } else {
        initRecognizer()
    }
    // …
}
```

A SpeechRecognizer is created from a Context, so we keep it in the Fragment rather than in the ViewModel. The initRecognizer method creates the recognizer intent and sets a RecognitionListener:

```kotlin
private fun initRecognizer() {
    speechRecognizerIntent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH).apply {
        putExtra(
            RecognizerIntent.EXTRA_LANGUAGE_MODEL,
            RecognizerIntent.LANGUAGE_MODEL_FREE_FORM
        )
        // EXTRA_LANGUAGE expects an IETF language tag string such as "en-US", not a Locale
        putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.US.toLanguageTag())
    }
    speechRecognizer = SpeechRecognizer.createSpeechRecognizer(requireContext())
    speechRecognizer.setRecognitionListener(
        object : RecognitionListener {
            override fun onReadyForSpeech(params: Bundle?) {}

            override fun onBeginningOfSpeech() {
                lifecycleScope.launch {
                    voiceSearchViewModel.setRecognitionStatus(RecognitionStarted)
                }
            }

            // …

            override fun onError(error: Int) {
                lifecycleScope.launch {
                    voiceSearchViewModel.setRecognitionStatus(RecognitionFailed(error))
                }
            }

            override fun onResults(results: Bundle?) {
                val data: ArrayList<String>? =
                    results?.getStringArrayList(SpeechRecognizer.RESULTS_RECOGNITION)
                // Take the best match, if any; avoids a crash on an empty result list
                data?.firstOrNull()?.let { result ->
                    lifecycleScope.launch {
                        voiceSearchViewModel.setRecognitionStatus(RecognitionSucceeded(result))
                    }
                }
            }

            // …
        }
    )
    speechRecognizer.startListening(speechRecognizerIntent)
}
```

Inside the listener methods, we pass the results to the ViewModel, which is responsible for processing these events. RecognitionStatus is a sealed class with three subclasses:

```kotlin
sealed class RecognitionStatus

object RecognitionStarted : RecognitionStatus()

class RecognitionFailed(val errorCode: Int) : RecognitionStatus()

class RecognitionSucceeded(val result: String?) : RecognitionStatus()
```

Once recognition is finished, the ViewModel emits data into actions and recognizedText:

```kotlin
@HiltViewModel
class VoiceSearchViewModel @Inject constructor() : ViewModel() {

    val recognizedText: MutableStateFlow<String?> = MutableStateFlow(null)
    val actions: MutableSharedFlow<RecognitionState> = MutableSharedFlow()

    suspend fun setRecognitionStatus(status: RecognitionStatus) {
        when (status) {
            RecognitionStarted -> actions.emit(RecognitionState.RECOGNITION_STARTED)

            is RecognitionFailed -> when (status.errorCode) {
                // Client-side errors are ignored
                SpeechRecognizer.ERROR_CLIENT -> Unit
                SpeechRecognizer.ERROR_NO_MATCH -> {
                    actions.emit(RecognitionState.NO_MATCH_ERROR)
                    actions.emit(RecognitionState.RECOGNITION_STARTED)
                }
                else -> actions.emit(RecognitionState.COMMON_ERROR)
            }

            is RecognitionSucceeded -> status.result?.let {
                recognizedText.emit(it)
                actions.emit(RecognitionState.RECOGNITION_SUCCEEDED)
            }
        }
    }
}
```

Then, back in the Fragment, we either process the recognized text or show a recognition error. If the text is not recognized or is recognized poorly, the listening process starts over again.

```kotlin
override fun onViewCreated(view: View, savedInstanceState: Bundle?) {
    // …
    viewLifecycleOwner.lifecycleScope.launch {
        viewLifecycleOwner.repeatOnLifecycle(Lifecycle.State.RESUMED) {
            launch { voiceSearchViewModel.recognizedText.collect(::showRecognizedText) }
            launch { voiceSearchViewModel.actions.collect(::processAction) }
        }
    }
}

fun showRecognizedText(text: String?) {
    text?.let {
        binding.searchResult.setText(text)
        binding.forwardButton.visibility = View.VISIBLE
        binding.backButton.text = resources.getString(R.string.search_clear)
    }
}

private fun processAction(action: RecognitionState) {
    when (action) {
        RecognitionState.RECOGNITION_STARTED -> {
            binding.searchStatus.text = resources.getText(R.string.search_listening)
            binding.searchResult.setText("")
            showVoiceWave()
        }
        RecognitionState.RECOGNITION_SUCCEEDED -> {
            binding.searchStatus.text = ""
            speechRecognizer.stopListening()
        }
        RecognitionState.NO_MATCH_ERROR -> {
            binding.searchStatus.text =
                resources.getString(R.string.search_recognition_error_no_match)
        }
        RecognitionState.COMMON_ERROR -> {
            binding.searchStatus.text =
                resources.getString(R.string.search_recognition_error_common)
        }
        RecognitionState.RECOGNITION_RERUN -> {
            binding.forwardButton.visibility = View.GONE
            binding.backButton.text =
                resources.getString(eu.bamboo.iamauto.core.R.string.cancel)
            speechRecognizer.startListening(speechRecognizerIntent)
        }
        RecognitionState.EXIT -> {
            findNavController().popBackStack()
        }
    }
}
```

Once the text is recognized, we can use it to run searches on maps or in media libraries.
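The error-handling rules in VoiceSearchViewModel can also be written as a pure function, which makes them easy to unit-test on a plain JVM. In this sketch, the two error constants mirror the values of `SpeechRecognizer.ERROR_CLIENT` (5) and `SpeechRecognizer.ERROR_NO_MATCH` (7), `RecognitionState` is trimmed to the four states involved, and `statesFor` is a hypothetical helper, not part of the project code.

```kotlin
// Mirrors android.speech.SpeechRecognizer.ERROR_CLIENT and ERROR_NO_MATCH.
const val ERROR_CLIENT = 5
const val ERROR_NO_MATCH = 7

sealed class RecognitionStatus
object RecognitionStarted : RecognitionStatus()
class RecognitionFailed(val errorCode: Int) : RecognitionStatus()
class RecognitionSucceeded(val result: String?) : RecognitionStatus()

// Subset of the app's RecognitionState values used by the mapping below.
enum class RecognitionState { RECOGNITION_STARTED, RECOGNITION_SUCCEEDED, NO_MATCH_ERROR, COMMON_ERROR }

// Pure version of the ViewModel's `when` block: the UI states to emit, in order.
fun statesFor(status: RecognitionStatus): List<RecognitionState> = when (status) {
    RecognitionStarted -> listOf(RecognitionState.RECOGNITION_STARTED)
    is RecognitionFailed -> when (status.errorCode) {
        ERROR_CLIENT -> emptyList() // ignored, as in the ViewModel
        ERROR_NO_MATCH -> listOf(RecognitionState.NO_MATCH_ERROR, RecognitionState.RECOGNITION_STARTED)
        else -> listOf(RecognitionState.COMMON_ERROR)
    }
    is RecognitionSucceeded ->
        if (status.result != null) listOf(RecognitionState.RECOGNITION_SUCCEEDED) else emptyList()
}
```

The ViewModel could then simply emit each returned state, keeping the Android-specific plumbing separate from the decision logic.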

To sum up

Thanks to our can-do attitude, we overcame the main challenges of our project and delivered a stable, MVP-ready product. This concept implementation brought us a step closer to a smartphone-driven vehicle control system that holds great potential for transforming a conventional car into an automotive ecosystem.

Smartphones on wheels with the convenience of one-click administration, new infotainment options, and centralized electronics architecture — the connected future of vehicles doesn’t seem so far off anymore, right? Applications like ours are expanding the innovation pipeline while demonstrating the holistic driver experience connected vehicles can deliver.

We at Orangesoft stand at the forefront of innovation and help OEMs move in the direction of software-defined vehicles by building up software capabilities, developing new business models for software, and providing the needed capacity to innovate.